When teams evaluate Geode as an Otter alternative, the comparison with Otter.ai often starts with features: transcription accuracy, summaries, and integrations.

That framing misses the real decision.

This is especially true when teams search for an Otter alternative because confidentiality—not convenience—is the primary constraint.

For confidential meetings, the cost of a recording tool is not just its price tag or feature list. It is the architectural cost—who must be trusted, what surfaces are exposed, and how risk scales as usage grows.

Otter.ai and Geode can both turn conversations into transcripts and summaries. They do so under fundamentally different architectures, and those differences carry very different long-term trade-offs.

This article looks at those trade-offs—not to declare a “winner,” but to make the costs visible.

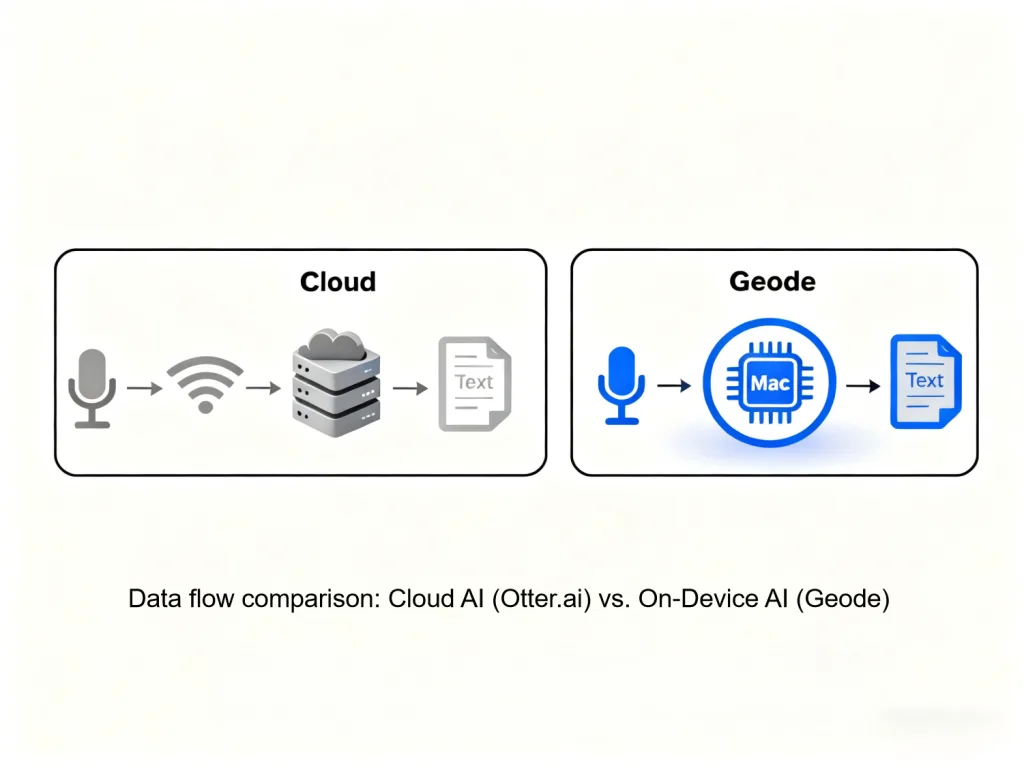

Two Ways to Turn Speech Into Text

At a high level, the distinction behind any serious Otter alternative is simple:

- Otter.ai processes audio on cloud infrastructure, which carries the long-term risks of cloud-based transcription.

- Geode runs transcription and AI summaries directly on the user’s device, using on-device AI processing.

That single difference determines where data travels, who can technically access it, and how control is enforced.

For teams actively evaluating an Otter alternative for confidential work, this architectural distinction matters more than any individual feature.

If you want a side-by-side technical breakdown, we’ve published a detailed architecture comparison of Geode vs Otter.ai that maps how cloud-based and on-device processing differ step by step. This article focuses on what those architectures imply for confidential work.

⸻

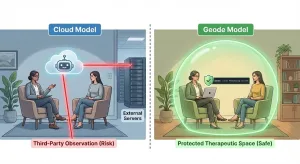

Cost #1: Trust Expansion in Cloud-Based Transcription

Cloud processing is not inherently unsafe. Many organizations rely on it every day.

But cloud architecture has a built-in property: trust must expand beyond the user’s device.

This trust expansion is the hidden cost most teams overlook, and it is the structural source of cloud-based transcription risk.

When a meeting is processed in the cloud:

- Audio leaves the device.

- Processing occurs in provider-controlled environments.

- Access controls are enforced through policies, permissions, and contracts.

That means the meeting’s confidentiality depends on correct configuration rather than on architecture-based privacy.

None of this implies wrongdoing. It simply reflects reality: cloud systems are designed for shared infrastructure and managed access.

On-device processing changes that equation.

When transcription and summaries run locally on the device:

- Audio does not transit external networks.

- No external processing environment exists.

- Access is limited by physical control of the device itself.

This is not a claim about intent or policy quality. It is a difference in where trust is enforced: by contracts and permissions, or by architecture-based privacy.

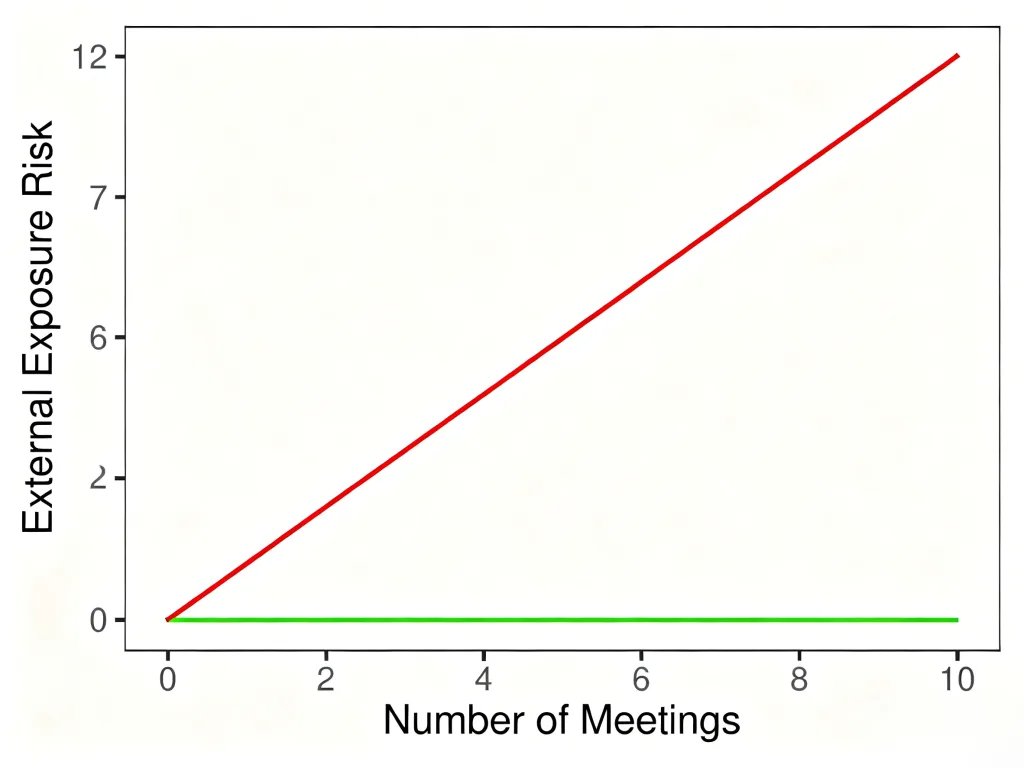

Cost #2: Scaling Risk in Managed Cloud Infrastructure

Another cost often overlooked when choosing a private meeting recorder is how risk scales as usage increases.

With cloud-based tools like Otter.ai:

- More meetings mean more data transmitted and processed externally.

- More data means a larger exposure surface and compounding cloud-based transcription risk over time.

- Usage-based pricing often encourages teams to ration recordings or record only selectively.

Again, none of this is inherently wrong. Cloud tools are optimized for collaboration, sharing, and centralized access.

But for teams handling sensitive discussions—legal strategy, executive decisions, internal investigations—the volume of recordings itself becomes a risk multiplier.

On-device models behave differently:

- Recording one meeting or one hundred does not change where processing occurs.

- There is no external system accumulating sensitive content.

- Cost does not scale with usage, because compute is tied to the device, not to minutes or tokens.

In practice, local AI transcription removes one scaling question entirely: whether more usage also means more external exposure.

The result is a different incentive structure: record freely without creating additional external risk.

Cost #3: Control Is Either Structural or Conditional

Most cloud tools frame control as a set of options:

- Permissions

- Sharing rules

- Admin policies

- Audit logs

These are valuable—and necessary—controls. But they are conditional controls: they work as long as systems and policies are configured correctly and remain unchanged.

On-device systems rely less on configuration and more on structural containment.

If processing never leaves the device:

- There is no admin console with access to raw data.

- There is no shared workspace by default.

- There is no external storage system to secure or audit.

This does not eliminate responsibility. It shifts it.

Control moves from managing access to constraining where computation happens—an approach aligned with architecture-based privacy, not policy enforcement.

In practice, many teams attempt to reduce exposure by tightening settings—disabling AI features, restricting access, or adjusting policies—within platforms that remain fundamentally cloud-based.

A common example is Zoom. While Zoom provides controls to limit certain AI features, those toggles do not change where audio is processed or whether cloud infrastructure remains part of the workflow.

We examine this configuration-versus-architecture gap in detail in a separate article.

This distinction reinforces the core theme of this article: configuration can limit how AI features are used, but architecture determines where computation, and therefore risk, actually resides.

How This Architectural Divide Plays Out Across High-Risk Contexts

The same architectural difference that defines a true Otter alternative leads to very different outcomes depending on the context in which recordings are made.

For readers evaluating confidential meeting transcription in specific environments, the implications are explored in more detail here:

- Healthcare & HIPAA contexts

→ Is Otter.ai HIPAA Compliant? What You Need to Know

- Legal and privileged work

→ Secure Legal Transcription: Building a Case-Note Workflow Without Cloud Dependency

- Financial and MNPI-sensitive discussions

→ Four Architectural Risks Financial Teams Often Overlook When Using Cloud-Based Meeting Recorders

- Therapy and mental health settings

→ How Therapists Can Safely Organize Session Notes

- EU and German organizations under GDPR

→ Why Many German Companies Are Avoiding Cloud-Based Meeting Transcription

These scenarios differ in regulation and culture—but they share the same architectural fault line.

When Otter.ai Is a Rational Choice

There are many situations where Otter.ai’s architecture is a strength:

- Large teams that prioritize real-time collaboration

- Meetings where sharing transcripts widely is the goal

- Environments where cloud processing is already accepted and governed

In those cases, centralized access and cloud workflows can be a feature, not a liability.

When Geode’s Architecture Is the Right Choice

Geode is designed for a narrower—but critical—set of use cases:

- Meetings where confidentiality is non-negotiable

- Professionals who cannot rely solely on policy-based assurances

- Teams that want AI assistance without usage-based metering

- Users who prefer their data to remain physically under their control

Geode functions as a private meeting recorder built around on-device AI processing, rather than external systems.

Geode does not eliminate responsibility or judgment. It eliminates one category of decision entirely: whether to trust an external processing pipeline with sensitive conversations.

The Real Comparison

This is not a debate about features—or about which Otter alternative has the longest checklist.

It is a choice between two cost models:

- Cloud AI: convenient, collaborative, and dependent on trust, policy, and configuration

- On-device AI: private by design, predictable, and enforced by where computation happens

Both have a place.

For confidential meetings, the difference is not subtle. It is architectural—and the cost of that choice compounds over time.

Ready to Reduce Architectural Exposure?

For a broader decision-oriented perspective on how to choose a meeting recorder for high-stakes workflows—across legal, healthcare, finance, and regulated environments—see:

→ The Confidentiality Decision Framework: How to Choose a Meeting Recorder for High-Stakes Workflows

Or explore how on-device processing changes the risk equation in practice.

[Download Geode for Mac] to experience fully on-device meeting intelligence built on local AI transcription and enforced by architecture—not promises.

Updated: Jan 9, 2026