This article is written for operational clarity and is not legal advice. Enterprises operating in regulated or confidentiality-sensitive environments should validate meeting-recording workflows with their security, compliance, and legal leadership as appropriate.

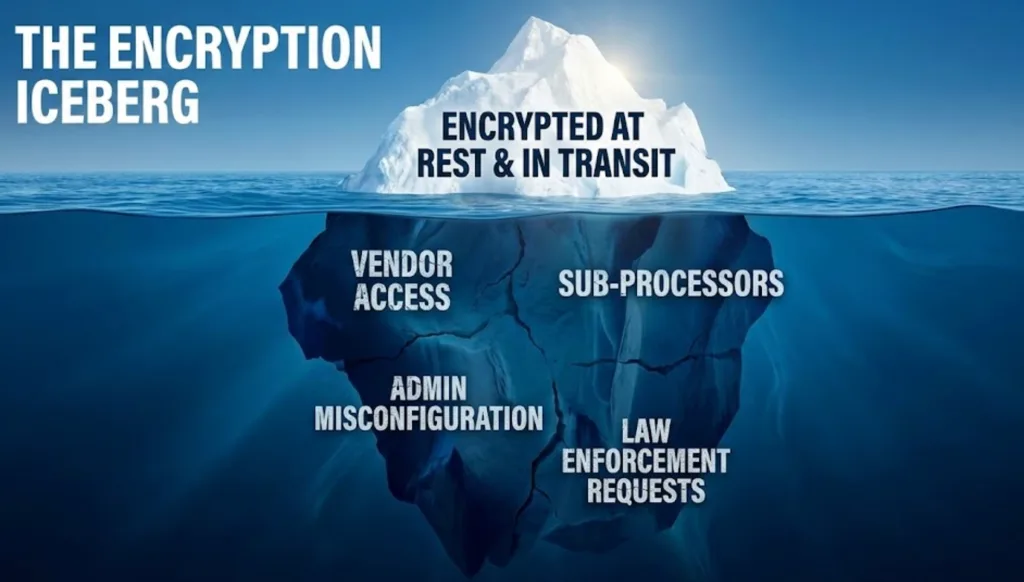

Many enterprise teams assume the main question is whether a cloud transcription vendor is “secure”—often framed as: “If it’s encrypted, we’re fine.”

Encryption is important. But it’s only one control inside a larger system.

For enterprise meeting transcription, the more fundamental question is architectural:

Where does the audio get processed—and what does that architecture force you to trust?

Encrypted cloud transcription can be workable in many environments. But local AI transcription, powered by on-device AI, often provides a cleaner, more defensible risk posture, especially when meetings include internal strategy, customer context, sensitive negotiations, or regulated data. That’s why “enterprise-grade” AI-powered transcription is increasingly evaluated as a security and architecture decision, not just a feature decision.

Below is a practical way to think about the trade-off.

⸻

Step 1: Understand what encryption does—and what it doesn’t

Encryption typically protects data:

- In transit (while moving over networks)

- At rest (while stored in databases or object storage)

That matters. But encryption alone does not eliminate:

- External processing (audio still leaves your environment)

- Access surfaces (admin/support/operational pathways that may exist depending on the service design)

- Policy dependencies (retention settings, sharing defaults, workspace controls)

- Processor chains (subprocessors, infrastructure providers, analytics tooling)

In other words: encryption is a powerful control—but it’s not the same thing as containment.

⸻

Step 2: The enterprise risk you’re really managing is “exposure surface”

Cloud transcription tends to create a recurring operational reality:

- Audio is uploaded

- Processing happens in vendor-controlled environments

- Transcripts/summaries may be stored externally

- Access and retention become ongoing governance tasks

Even when everything is “encrypted,” the organization still has to continuously answer:

- Who can technically access what, and through which paths?

- What is retained, for how long, and where?

- What changes when a workspace admin toggles a setting?

- What happens during support events, incidents, or migrations?

This is why many security teams don’t evaluate transcription as a feature. They evaluate it as a workflow with a living attack surface, one whose risk profile persists even when the data is encrypted.
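To make the “what changes when an admin toggles a setting?” question concrete, here is a minimal Python sketch of a settings drift check. Everything in it is an assumption for illustration: the `WorkspaceSettings` fields, the `audit_settings` helper, and the 90-day retention baseline are hypothetical and do not correspond to any particular vendor’s API or to a recommended policy.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class WorkspaceSettings:
    """Hypothetical snapshot of a cloud transcription workspace's settings."""
    retention_days: Optional[int]   # None means "retain indefinitely"
    sharing_default: str            # e.g. "private", "workspace", "public_link"
    vendor_support_access: bool     # can vendor support staff open recordings?
    sso_required: bool


def audit_settings(settings: WorkspaceSettings, max_retention_days: int = 90) -> List[str]:
    """Return a list of findings; an empty list means no drift from the baseline."""
    findings = []
    if settings.retention_days is None or settings.retention_days > max_retention_days:
        findings.append("retention exceeds baseline")
    if settings.sharing_default != "private":
        findings.append("sharing default is not private")
    if settings.vendor_support_access:
        findings.append("vendor support access is enabled")
    if not settings.sso_required:
        findings.append("SSO is not enforced")
    return findings


# Illustrative values only: a compliant snapshot and one taken after two toggles.
baseline_ok = WorkspaceSettings(30, "private", False, True)
after_toggle = WorkspaceSettings(None, "public_link", False, True)

print(audit_settings(baseline_ok))
print(audit_settings(after_toggle))
```

The point of the sketch is that a check like this has to run repeatedly, because each setting can change after the initial review.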

⸻

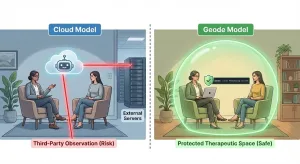

Step 3: Local AI transcription reduces entire classes of risk by changing the architecture

Local AI transcription approaches keep processing on hardware your organization controls (a managed endpoint, a secure workstation, or a controlled device fleet). That shifts the default posture from “govern cloud exposure” to “avoid creating it.”

When processing stays local, you can often reduce:

- External data transfers (fewer places audio can travel)

- Long-lived third-party repositories (fewer places transcripts can accumulate)

- Vendor access surfaces (fewer reasons to model and audit external access)

- Configuration fragility (fewer cloud settings that must remain perfect under pressure)

Stop uploading audio. The difference is physical: it’s where processing occurs.

That line isn’t a slogan—it’s a practical description of why local AI transcription (via on-device AI) can be easier to defend in audits and incident reviews. The architecture itself removes dependencies you’d otherwise need to govern forever.
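One rough way to picture the architectural difference is to list each stage of the workflow alongside who controls it. The Python sketch below is an idealized model under stated assumptions: the stage names follow the cloud flow described in Step 2, the `"org"` and `"vendor"` labels are hypothetical, and a real deployment may have more stages or mixed control.

```python
from typing import List, Tuple

# Each entry is (stage, controller). Labels are illustrative assumptions.
Flow = List[Tuple[str, str]]

CLOUD_FLOW: Flow = [
    ("capture audio", "org"),
    ("upload audio", "vendor"),
    ("run transcription", "vendor"),
    ("store transcript and summary", "vendor"),
]

LOCAL_FLOW: Flow = [
    ("capture audio", "org"),
    ("run transcription", "org"),
    ("store transcript and summary", "org"),
]


def external_stages(flow: Flow) -> List[str]:
    """Return the stages that are not controlled by the organization."""
    return [stage for stage, controller in flow if controller != "org"]


print("cloud:", external_stages(CLOUD_FLOW))
print("local:", external_stages(LOCAL_FLOW))
```

Each stage returned for the cloud flow is something to model, audit, and govern over time. In this idealized local flow the list is empty, which is the sense in which the architecture removes dependencies rather than managing them.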

⸻

Step 4: Why encrypted cloud transcription can still be the right choice sometimes

Local AI transcription isn’t automatically “better” for every enterprise scenario.

Encrypted cloud transcription workflows can make sense when:

- Teams require real-time collaboration and shared archives

- Centralized administration is a feature, not a liability

- Governance is mature (SSO, RBAC, logs, retention policies, DPIAs where relevant)

- The organization has a clear vendor-management model (including subprocessors, support processes, and contract review)

The key is to treat encrypted cloud transcription as a decision you continuously manage—rather than a box you check once.

⸻

Step 5: A practical decision rule for enterprise teams

If you’re deciding between local AI transcription and encrypted cloud transcription, ask:

1. Is the meeting content confidentiality-sensitive?

If yes, start with containment as the primary constraint.

2. Would it be acceptable for the audio and transcripts to exist outside your control as an operational norm?

If no, default to local processing.

3. Are you prepared to continuously govern access, retention, and configuration across time?

If not, prefer architectures that reduce the number of moving parts.

A useful framing is:

- Cloud encryption reduces damage if exposure happens.

- Local AI transcription reduces the chance and scope of exposure by avoiding external processing in the first place.
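The three questions above can be sketched as a small function. This is one possible encoding, not a definitive policy: the `MeetingProfile` fields, the `recommend` helper, and the `"local"` / `"cloud_workable"` labels are hypothetical names introduced for illustration, and the sketch treats question 1 as adding a constraint note rather than deciding the outcome on its own.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class MeetingProfile:
    confidentiality_sensitive: bool     # question 1
    external_storage_acceptable: bool   # question 2
    can_govern_continuously: bool       # question 3


def recommend(profile: MeetingProfile) -> Tuple[str, List[str]]:
    """Apply the three questions in order and return (recommendation, notes)."""
    notes: List[str] = []
    prefer_local = False

    # Question 1: sensitive content makes containment the primary constraint.
    if profile.confidentiality_sensitive:
        notes.append("containment is the primary constraint")

    # Question 2: if external audio/transcripts are not acceptable, default to local.
    if not profile.external_storage_acceptable:
        prefer_local = True
        notes.append("external copies not acceptable: default to local processing")

    # Question 3: without ongoing governance capacity, prefer fewer moving parts.
    if not profile.can_govern_continuously:
        prefer_local = True
        notes.append("no continuous governance: prefer fewer moving parts")

    return ("local" if prefer_local else "cloud_workable", notes)


# Example: a sensitive meeting where external copies are not acceptable.
decision, reasons = recommend(MeetingProfile(True, False, True))
print(decision, reasons)
```

Returning the notes alongside the recommendation keeps the reasoning visible, which helps when the decision has to be explained in a review.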

⸻

The takeaway

Enterprises don’t fail confidentiality-sensitive workflows because they “ignored encryption.”

They fail because they underestimated how much trust and governance a cloud architecture quietly requires.

Local AI transcription often wins in enterprise meeting transcription not because it’s “more encrypted,” but because it can be more containable—and therefore simpler to defend as systems scale.

⸻

A quiet next step

If your organization wants transcripts and summaries without building a long-lived external repository of sensitive audio, evaluate a local AI transcription workflow—built on on-device AI—where processing stays on devices you control and exposure is constrained by architecture, not settings.